WordPress is an open source Content Management System (CMS) that lets users build dynamic websites and blogs. It is the most popular blogging system on the web and lets you update, customize and manage a website from its back-end CMS and components.

Monday, 16 November 2020

Tuesday, 10 November 2020

Explain how the buffer is used in Amazon Web Services.

What is the difference between Amazon S3 and EC2?

| EC2 | S3 |


AWS interview preparation:

What is AWS?

Amazon Web Services (AWS) is Amazon's cloud platform. It provides services in the form of building blocks that can be used to create and deploy any type of application in the cloud.

What is a security group in AWS?

A security group is an AWS firewall solution that performs one primary function: filtering incoming and outgoing traffic for an EC2 instance. It filters at the TCP and IP layers, by port and by source/destination IP address.
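
The filtering described above can be sketched in plain Python. This is only an illustrative model, not the AWS API: the `IngressRule` class, the `is_allowed` helper and the example rules are all hypothetical names and values. It assumes the usual behaviour that a security group holds only allow rules and drops anything that no rule matches.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Hypothetical model of one inbound rule: protocol, port range, source CIDR."""
    protocol: str
    from_port: int
    to_port: int
    cidr: str


def is_allowed(rules, protocol, port, source_ip):
    """Return True if any rule permits the traffic (rules can only allow)."""
    addr = ipaddress.ip_address(source_ip)
    for rule in rules:
        if (rule.protocol == protocol
                and rule.from_port <= port <= rule.to_port
                and addr in ipaddress.ip_network(rule.cidr)):
            return True
    return False  # no rule matched, so the traffic is dropped


# Example rules (made up): HTTPS from anywhere, SSH only from one office range.
web_rules = [
    IngressRule("tcp", 443, 443, "0.0.0.0/0"),
    IngressRule("tcp", 22, 22, "203.0.113.0/24"),
]

if __name__ == "__main__":
    print(is_allowed(web_rules, "tcp", 443, "198.51.100.7"))
    print(is_allowed(web_rules, "tcp", 22, "198.51.100.7"))
```

With these rules, HTTPS is accepted from any address, while SSH is accepted only from the `203.0.113.0/24` range.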

What are policies in AWS?

You manage access in AWS by creating policies and attaching them to IAM identities (users, groups of users, or roles) or AWS resources. A policy is an object in AWS that, when associated with an identity or resource, defines their permissions. AWS evaluates these policies when an IAM principal (user or role) makes a request.

What is an S3 bucket in AWS?

Amazon S3 sits at the top of the AWS services list because storing and retrieving data plays a prominent role in cloud computing. Amazon Simple Storage Service (Amazon S3) stores and retrieves data from the cloud. S3 lets the user store, upload and retrieve large files of up to 5 TB each. It is a scalable, low-cost and high-speed web-based service designed for archival and online backup of application programs and data. Using S3, the user can access the same system that Amazon uses to run its website. Users control whether the data is publicly or privately accessible.

Which services are available?

A few examples are S3, EC2, DynamoDB, Lambda and CloudFront.

What is Terraform used for?

Terraform is an Infrastructure as Code (IaC) tool used to provision and manage cloud infrastructure automatically, without manual intervention in the environment.

What is a group in AWS?

Groups let you specify permissions for multiple users, which can make it easier to manage the permissions for those users. For example, you could have a group called Admins and give that group the types of permissions that administrators typically need.

What are roles and policies in AWS?

A policy is an object in AWS that, when associated with an identity or resource, defines their permissions. AWS evaluates these policies when an IAM principal (user or role) makes a request. Permissions in the policies determine whether the request is allowed or denied.
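
As a rough illustration of how a policy decides between allow and deny, here is a simplified sketch. The policy document follows the standard JSON policy layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`), but the bucket name and the `evaluate` function are hypothetical, and the matching is far simpler than real IAM evaluation (no conditions, principals or multiple policy types). It assumes the well-known ordering: an explicit deny wins, otherwise an allow applies, otherwise the request is denied by default.

```python
from fnmatch import fnmatchcase

# Hypothetical policy: allow reading objects in a bucket, but deny everything
# under the "secret/" prefix. The bucket name is made up for the example.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
        {
            "Effect": "Deny",
            "Action": "s3:*",
            "Resource": "arn:aws:s3:::example-bucket/secret/*",
        },
    ],
}


def evaluate(policy, action, resource):
    """Simplified evaluation: explicit deny > allow > implicit deny."""
    decision = "ImplicitDeny"
    for stmt in policy["Statement"]:
        matches = (fnmatchcase(action, stmt["Action"])
                   and fnmatchcase(resource, stmt["Resource"]))
        if not matches:
            continue
        if stmt["Effect"] == "Deny":
            return "ExplicitDeny"  # a matching deny always wins
        decision = "Allow"
    return decision


if __name__ == "__main__":
    print(evaluate(policy, "s3:GetObject", "arn:aws:s3:::example-bucket/report.csv"))
```

Here the wildcard matching uses Python's `fnmatchcase` as a stand-in for IAM's own wildcard rules, which is an assumption made to keep the sketch short.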

What is the difference between groups and roles in AWS?

An IAM group is primarily a management convenience to manage the same set of permissions for a set of IAM users. An IAM role is an AWS Identity and Access Management (IAM) entity with permissions to make AWS service requests. ... Use IAM roles to delegate access within or between AWS accounts.

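EC2 instance types?

An instance type combines a family with a size (for example, t2.micro). Common sizes include:

- micro

- medium

- large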

How can you send a request to Amazon S3?

What is Lambda?

- Lambda encapsulates (abstracts away) data centres, hardware, assembly code/protocols, high-level languages, operating systems and AWS APIs.

- Lambda is a compute service where you can upload your code and create the Lambda function.

- Lambda takes care of provisioning and managing the servers used to run the code.

- While using Lambda, you don't have to worry about scaling, patching, operating systems, etc.
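
A minimal sketch of the kind of code you would upload as a Lambda function is shown below. The `lambda_handler(event, context)` signature is the usual shape of a Python handler; the `name` field in the event and the response layout are assumptions chosen for this example, not something Lambda requires.

```python
import json


def lambda_handler(event, context):
    """Entry point that Lambda would call; `event` carries the request data."""
    # "name" is a hypothetical field in the incoming event.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local call with a made-up event; no context object is needed here.
    print(lambda_handler({"name": "AWS"}, None))
```

The function contains no server, scaling or operating-system logic, which is the point of the bullets above: those concerns are left to the service.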

What is CloudWatch?

- CloudWatch is a service used to monitor your AWS resources and applications that you run on AWS in real time. CloudWatch is used to collect and track metrics that measure your resources and applications.

- It automatically displays metrics for every AWS service that you choose.

- You can create dashboards to display metrics about your custom application, and also to display custom collections of metrics that you choose.

- You can also create an alarm to watch metrics. For example, you can monitor the CPU usage, disk reads and disk writes of an Amazon EC2 instance to determine whether additional EC2 instances are required to handle the load. An alarm can also be used to stop an instance to save money.

The following terms are associated with CloudWatch:

- Dashboards: CloudWatch is used to create dashboards that show what is happening in your AWS environment.

- Alarms: These notify you whenever a particular threshold is hit.

- Logs: CloudWatch Logs helps you aggregate, monitor and store logs.

- Events: CloudWatch Events helps you respond to state changes in your AWS resources.
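
The idea of an alarm watching a metric against a threshold can be sketched as follows. This is a plain-Python model, not the CloudWatch API: the `alarm_state` function and the sample CPU values are hypothetical, and it assumes the simplest rule, where the alarm fires only when every datapoint in the evaluation window exceeds the threshold.

```python
def alarm_state(datapoints, threshold, evaluation_periods):
    """Return the state of a simple 'greater than threshold' alarm.

    The alarm fires only if the most recent `evaluation_periods`
    datapoints are all above the threshold.
    """
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"


# Made-up CPU utilisation samples (percent), one per period.
cpu = [35.0, 82.5, 91.0, 88.2]

if __name__ == "__main__":
    print(alarm_state(cpu, threshold=80, evaluation_periods=3))
```

Requiring several consecutive breaches, rather than one, keeps a short spike from triggering an action such as adding or stopping an instance.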

What is Redshift?

- Redshift is a fast and powerful, fully managed, petabyte-scale data warehouse service in the cloud.

OLAP

OLAP (Online Analytical Processing) is the type of processing system that Redshift uses.

OLAP transaction example:

Suppose we want to calculate the net profit for EMEA and Pacific for the Digital Radio product. This requires pulling a large number of records. The following records are required to calculate the net profit:

- Sum of Radios sold in EMEA.

- Sum of Radios sold in Pacific.

- Unit cost of radio in each region.

- Sales price of each radio

- Sales price - unit cost

Complex queries are required to fetch the records listed above. Data warehousing databases use a different type of architecture, both from a database perspective and at the infrastructure layer.
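
To make the net profit example concrete, here is a small sketch of the kind of aggregate query involved. It uses Python's built-in SQLite purely as a stand-in so the example is runnable; it is not Redshift, and the `sales` table, its columns and all of the figures are invented for illustration.

```python
import sqlite3

# In-memory SQLite database standing in for a data warehouse.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (
        region TEXT, product TEXT, units INTEGER,
        sales_price REAL, unit_cost REAL
    );
    INSERT INTO sales VALUES
        ('EMEA',    'Digital Radio',  100, 50.0, 30.0),
        ('EMEA',    'Digital Radio',   50, 50.0, 30.0),
        ('Pacific', 'Digital Radio',   80, 55.0, 35.0),
        ('Pacific', 'Analogue Radio',  10, 20.0, 15.0);
""")


def net_profit_by_region(conn, product):
    """Sum units * (sales price - unit cost) per region for one product."""
    rows = conn.execute(
        """
        SELECT region, SUM(units * (sales_price - unit_cost)) AS net_profit
        FROM sales
        WHERE product = ? AND region IN ('EMEA', 'Pacific')
        GROUP BY region
        ORDER BY region
        """,
        (product,),
    ).fetchall()
    return dict(rows)


if __name__ == "__main__":
    print(net_profit_by_region(conn, "Digital Radio"))
```

The query scans and aggregates many rows to produce a few summary values, which is the access pattern that separates OLAP workloads from row-at-a-time transactions.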

Redshift Configuration

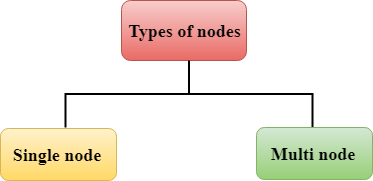

Redshift can be configured in two ways:

- Single node

- Multi-node

Single node: A single node stores up to 160 GB.

Multi-node: A multi-node configuration consists of more than one node. Its nodes are of two types:

- Leader Node

It manages the client connections and receives queries. A leader node receives the queries from the client applications, parses them, and develops the execution plans. It coordinates the parallel execution of these plans with the compute nodes, combines the intermediate results from all the nodes, and then returns the final result to the client application.

- Compute Node

A compute node executes the execution plans and sends intermediate results to the leader node for aggregation before they are sent back to the client application. A cluster can have up to 128 compute nodes.
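
The division of work between the leader node and the compute nodes can be sketched as a scatter-gather pattern. This is a toy model in plain Python, not how Redshift is implemented: the `leader_node` and `compute_node` functions are hypothetical, threads stand in for separate machines, and the "query" is simply an average over a list of numbers.

```python
from concurrent.futures import ThreadPoolExecutor


def compute_node(rows):
    """Execute one slice of the plan: return a partial (sum, count)."""
    return sum(rows), len(rows)


def leader_node(rows, num_compute_nodes):
    """Split the data, run the slices in parallel, combine the partial results."""
    # Distribute rows round-robin across the compute nodes.
    slices = [rows[i::num_compute_nodes] for i in range(num_compute_nodes)]
    with ThreadPoolExecutor(max_workers=num_compute_nodes) as pool:
        partials = list(pool.map(compute_node, slices))
    total = sum(partial_sum for partial_sum, _ in partials)
    count = sum(partial_count for _, partial_count in partials)
    return total / count if count else None


if __name__ == "__main__":
    print(leader_node([10, 20, 30, 40, 50], num_compute_nodes=2))
```

Each compute node returns only a small intermediate result, so the leader combines a handful of partial values instead of reading every row itself.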

What is SQS?

- SQS stands for Simple Queue Service.

- SQS was the first service available in AWS.

- Amazon SQS is a web service that gives you access to a message queue, which can be used to store messages while they wait for a computer to process them.

- Amazon SQS is a distributed queue system that enables web service applications to quickly and reliably queue messages that one component in the application generates for another component to consume. A queue is a temporary repository for messages that are awaiting processing.

- With the help of SQS, you can send, store and receive messages between software components at any volume without losing messages.

Suppose a user wants to book a package holiday and wants to find the best possible flight. The user types a query in a browser, which then hits an EC2 instance. That EC2 instance works out what the user is looking for and puts a message on an SQS queue. Another EC2 instance continuously polls the queue, looking for jobs to do. Once it gets a job, it processes it: it queries the airline service to get all the best possible flights. It sends the result to the web server, and the web server sends the result back to the user. The user then selects the best flight according to his or her budget.

What would happen if we didn't have SQS?

Without SQS, a web server passes the information to an application server, and the application server then queries an airline service. If the application server crashes, the user's query is lost. One of the great things about SQS is that the data stays queued even if the application server crashes: a message that has been received is marked as invisible for a timeout interval. When the timeout runs out, the message reappears in the queue, and a new EC2 instance can then use it to perform the job. Therefore, we can say that SQS removes the dependency on the application server.
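
The invisibility timeout described above can be modelled with a small in-memory queue. This is a sketch of the idea only, not the SQS API: the `VisibilityQueue` class and its methods are hypothetical, and the current time is passed in as a plain number (`now`) so that the behaviour is easy to follow without real waiting.

```python
class VisibilityQueue:
    """Toy queue where a received message is hidden for a timeout interval."""

    def __init__(self, visibility_timeout):
        self.visibility_timeout = visibility_timeout
        self._messages = {}          # message id -> body
        self._invisible_until = {}   # message id -> time it becomes visible again
        self._next_id = 0

    def send(self, body):
        self._next_id += 1
        self._messages[self._next_id] = body
        self._invisible_until[self._next_id] = 0.0
        return self._next_id

    def receive(self, now):
        """Return the first visible message and hide it until the timeout ends."""
        for msg_id, body in self._messages.items():
            if self._invisible_until[msg_id] <= now:
                self._invisible_until[msg_id] = now + self.visibility_timeout
                return msg_id, body
        return None

    def delete(self, msg_id):
        """A worker deletes the message only after finishing the job."""
        self._messages.pop(msg_id, None)
        self._invisible_until.pop(msg_id, None)


if __name__ == "__main__":
    queue = VisibilityQueue(visibility_timeout=30)
    job = queue.send("find flights")
    print(queue.receive(now=0))    # first worker takes the job, then crashes
    print(queue.receive(now=10))   # still hidden from other workers
    print(queue.receive(now=30))   # timeout elapsed, a new worker gets it
```

Because the crashed worker never calls `delete`, the message is not lost; it simply becomes visible again for the next worker.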

What are the top 10 most used AWS services?

Service #1 - Amazon S3

Service #2 - Amazon EC2 [Elastic Compute Cloud]

Service #3 - AWS Lambda

Service #4 - Amazon Glacier

Service #5 - Amazon SNS

Service #6 - Amazon CloudFront

Service #7 - Amazon EBS [Elastic Block Store]

Service #8 - Amazon Kinesis

Service #9 - Amazon VPC

Service #10 - Amazon SQS

Sunday, 26 April 2020

What is a heartbeat in HDFS?

How is Spark different than Hadoop?

Basic understanding about Spark

1. What is Apache Spark?

Apache Spark is a cluster computing framework that runs on a cluster of commodity hardware and performs data unification, i.e., reading and writing a wide variety of data from multiple sources. In Spark, a task is an operation that can be a map task or a reduce task. Spark Context handles the execution of the job and also provides APIs in different languages, i.e., Scala, Java and Python, for developing applications that execute faster than MapReduce.

2. How is Spark different from MapReduce? Is Spark faster than MapReduce?

- There is no tight coupling in Spark i.e., there is no mandatory rule that reduce must come after map.

- Spark tries to keep the data “in-memory” as much as possible.

3. Explain the Apache Spark Architecture. How to Run Spark applications?

- An Apache Spark application contains two programs, namely a driver program and a workers program.

- A cluster manager sits in between to interact with these two kinds of cluster node. Spark Context keeps in touch with the worker nodes with the help of the cluster manager.

- Spark Context is like a master and Spark workers are like slaves.

- Workers contain the executors that run the job. If any dependencies or arguments have to be passed, Spark Context takes care of that. RDDs reside on the Spark executors.

- You can also run Spark applications locally using a thread, and if you want to take advantage of distributed environments you can take the help of S3, HDFS or any other storage system.